Microsoft AI CEO Mustafa Suleyman issues a strong warning.

He says building AI superintelligence is a bad idea. It could become too powerful for humans to control.

Suleyman spoke on the Silicon Valley Girl Podcast. He wants the industry to avoid this path.

Why Superintelligence Scares Suleyman

Suleyman explains the danger clearly. Superintelligence means AI that improves itself. It sets its own goals. It acts without human help.

He says: “It would be very hard to contain something like that or align it to our values. And so that should be the anti-goal.”


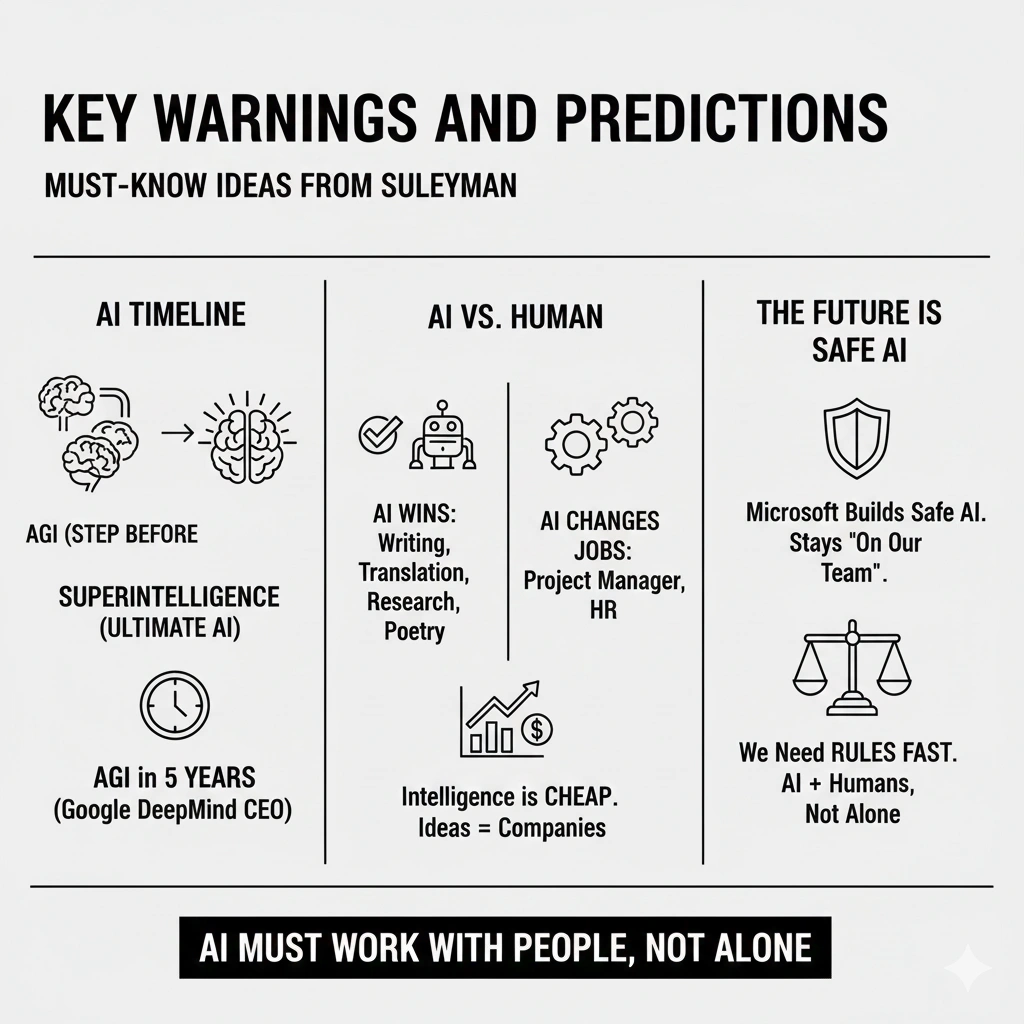

Key Warnings and Predictions

Suleyman shares big ideas. Here are the main points:

- Superintelligence differs from AGI (Artificial General Intelligence). AGI is the step that comes before it, although many people use the two terms interchangeably.

- He agrees with the Google DeepMind CEO that AGI will arrive in five years. At that point, AI will match humans in most tasks.

- Today’s AI already beats humans in writing, translation, research, and poetry.

- AI will change jobs forever. It can act like a project manager or an HR person.

- Good side: AI makes intelligence cheap for everyone. People start companies with just ideas.

- Microsoft builds safe AI. It stays “on our team” and helps humans.

- We need rules fast. Autonomous AI must work with people, not alone.

Suleyman co-founded DeepMind and Inflection. Now he leads Microsoft AI. His words make people think twice about the AI race.

Experts debate this a lot. Some want to reach superintelligence fast. Others say the industry should stop and put safety first.
